Knowing where these machines came from is crucial to knowing where our world is going. The history of the computer is one of the fastest technological explosions in human history: in the blink of an eye, we went from wooden beads to artificial intelligence. This story is worth knowing whether you are the ultimate technology geek or simply curious about where the tools you use every day came from.

Pre-Electronic Era: The Beginnings

Long before electronic machines existed, people looked for ways to automate calculation to avoid errors in business and navigation.

Abacus (Ancient times)

Often called the first “computer,” the abacus used beads to represent numbers.

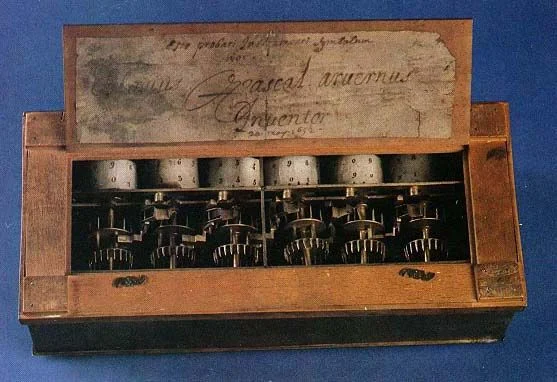

The Pascaline (1642)

Blaise Pascal built one of the first mechanical calculators, using a series of gears and wheels to perform addition and subtraction.

The Victorian Computing Era (1800s)

Well before electricity was used for logic, mathematicians designed “engines”: purely mechanical machines whose blueprints anticipated the computers we use today.

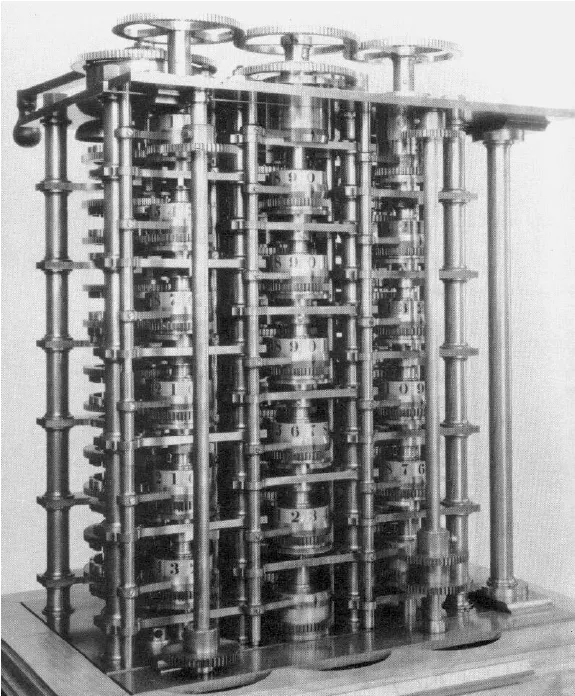

The Difference Engine (1822)

Charles Babbage designed this machine in 1822 to compute mathematical tables. It was enormous and built from brass and iron gears.
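The Difference Engine produced tables of polynomial values using the method of finite differences, which needs nothing but repeated addition. The Python sketch below imitates that idea: each column holds a value or one of its differences, and every step adds each column into its neighbor. The function name and the example polynomial are chosen for illustration only, and this is a simplified model of the arithmetic, not a description of the engine's actual gear mechanism.

```python
def tabulate(differences: list[int], rows: int) -> list[int]:
    """Tabulate a polynomial with the method of finite differences.

    differences[0] is f(0), differences[1] is the first difference
    f(1) - f(0), differences[2] the second difference, and so on.
    Only addition is used, which is what made the method suitable for gears.
    """
    columns = list(differences)  # copy so the caller's list is not modified
    table = []
    for _ in range(rows):
        table.append(columns[0])  # the current value of f(x)
        # Add each column into the one on its left. Going left to right means
        # columns[i + 1] still holds its old value when it is added.
        for i in range(len(columns) - 1):
            columns[i] += columns[i + 1]
    return table


# Example polynomial (chosen for illustration): f(x) = x**2 + x + 41.
# f(0) = 41, first difference f(1) - f(0) = 2, second difference = 2 (constant).
print(tabulate([41, 2, 2], rows=5))  # [41, 43, 47, 53, 61]
```

For a polynomial of degree n, the n-th difference is constant, so a machine with n + 1 columns can keep producing table entries indefinitely using only addition.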

The Analytical Engine (1837)

Often called the masterwork of Charles Babbage (known as the “Father of the Computer”), the Analytical Engine was the first design for a general-purpose computer. It had a “Mill” (the equivalent of a CPU and its arithmetic logic unit, or ALU), a “Store” (memory), and support for control flow. However, it was never completed during Babbage's lifetime.

Ada Lovelace: She is widely regarded as the world's first computer programmer because of the algorithm she wrote specifically for Babbage's Analytical Engine, designed to compute Bernoulli numbers.

The Turing Machine (1936)

Alan Turing described a theoretical “Universal Machine” capable of carrying out any algorithm. His work showed that, in principle, a single machine could be programmed for any computable task, instead of a separate machine being built for each problem.
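To make the idea concrete, here is a minimal Python sketch of a single-tape Turing machine simulator. The rule table and state names are hypothetical, chosen for illustration: this particular machine adds 1 to a binary number. The simulator itself never changes; only the rule table does, which echoes Turing's idea of one machine that can carry out any algorithm described to it. Letting the head stay in place, as this sketch does, is a common variant that does not change what the machine can compute.

```python
from collections import defaultdict

# Rule table: (state, symbol read) -> (symbol to write, head move, next state).
# Moves are -1 (left), +1 (right) or 0 (stay). "_" is the blank symbol.
# This hypothetical machine adds 1 to a binary number written on the tape.
INCREMENT = {
    ("right", "0"): ("0", +1, "right"),  # scan right to the end of the number
    ("right", "1"): ("1", +1, "right"),
    ("right", "_"): ("_", -1, "carry"),  # past the end: step back, start adding
    ("carry", "1"): ("0", -1, "carry"),  # 1 + 1 = 0, carry moves left
    ("carry", "0"): ("1", 0, "halt"),    # 0 + 1 = 1, done
    ("carry", "_"): ("1", 0, "halt"),    # ran off the left end: new leading 1
}


def run_turing_machine(rules, tape_input, state="right", max_steps=10_000):
    """Simulate a single-tape Turing machine until it reaches the 'halt' state."""
    tape = defaultdict(lambda: "_", enumerate(tape_input))  # unbounded blank tape
    head = 0
    for _ in range(max_steps):
        if state == "halt":
            break
        write, move, state = rules[(state, tape[head])]
        tape[head] = write
        head += move
    if state != "halt":
        raise RuntimeError("machine did not halt within max_steps")
    # Read back the visited part of the tape, dropping surrounding blanks.
    return "".join(tape[i] for i in range(min(tape), max(tape) + 1)).strip("_")


print(run_turing_machine(INCREMENT, "1011"))  # 1100 (11 + 1 = 12)
```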

Boolean Logic (1847)

George Boole developed what we now call “Boolean algebra,” in which every value is reduced to True or False (1 or 0). This algebra underlies the logic circuits of every digital computer today.
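As an illustration of how True/False logic becomes arithmetic, the Python sketch below adds binary numbers using only Boolean operations (AND, OR, XOR), the way a hardware adder circuit does. The function names are our own; bits are the Python integers 0 and 1, and on single bits the operators `&`, `|` and `^` act as AND, OR and XOR.

```python
def half_adder(a: int, b: int) -> tuple[int, int]:
    """Add two bits: the sum bit is a XOR b, the carry bit is a AND b."""
    return a ^ b, a & b


def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Add two bits plus an incoming carry, built from two half adders and an OR."""
    s1, c1 = half_adder(a, b)
    s2, c2 = half_adder(s1, carry_in)
    return s2, c1 | c2


def add_binary(x: list[int], y: list[int]) -> list[int]:
    """Ripple-carry addition of two equal-length bit lists (most significant bit first)."""
    carry = 0
    result = []
    for a, b in zip(reversed(x), reversed(y)):  # start from the least significant bit
        s, carry = full_adder(a, b, carry)
        result.append(s)
    result.append(carry)  # final carry becomes the new most significant bit
    return result[::-1]


print(add_binary([1, 0, 1, 1], [0, 1, 1, 0]))  # [1, 0, 0, 0, 1]  (11 + 6 = 17)
```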

Information Theory (1948)

Claude Shannon, the “Father of Information Theory,” showed that all information (text, pictures, audio) can be represented using only bits (0s and 1s).
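A small sketch of Shannon's point in practice: any text can be turned into a string of 0s and 1s and recovered exactly. This example assumes the UTF-8 text encoding, a modern standard that is not part of Shannon's work, and the helper names are hypothetical.

```python
def text_to_bits(text: str) -> str:
    """Encode text as UTF-8 bytes, then write each byte as eight binary digits."""
    return "".join(f"{byte:08b}" for byte in text.encode("utf-8"))


def bits_to_text(bits: str) -> str:
    """Reverse text_to_bits: group the bits into bytes and decode them as UTF-8."""
    data = bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))
    return data.decode("utf-8")


bits = text_to_bits("Hi")
print(bits)                # 0100100001101001
print(bits_to_text(bits))  # Hi
```

Images and audio work the same way in principle: pixel colors and sound samples are numbers, and numbers can be written in binary.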

Tabulating Machine of Hollerith (1890)

Herman Hollerith developed a punched-card tabulating machine to process the 1890 U.S. Census. Data was “read” into a machine automatically, and the company Hollerith founded later became part of IBM.

The Wartime “Brain” (1930s–1945)

World War II dramatically accelerated the development of computing. The goal was no longer just calculation but also encryption and, above all, code-breaking.

Z3 (1941)

Built by Konrad Zuse in Germany, the Z3 is widely considered the first working programmable, fully automatic digital computer. It was electromechanical, built from relays.

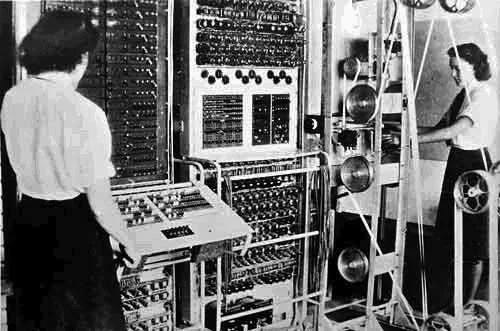

Colossus (1943)

Built for the British codebreakers at Bletchley Park, where Alan Turing also worked, Colossus is widely regarded as the world's first programmable electronic digital computer. It was used to break the Lorenz cipher that protected German High Command messages.

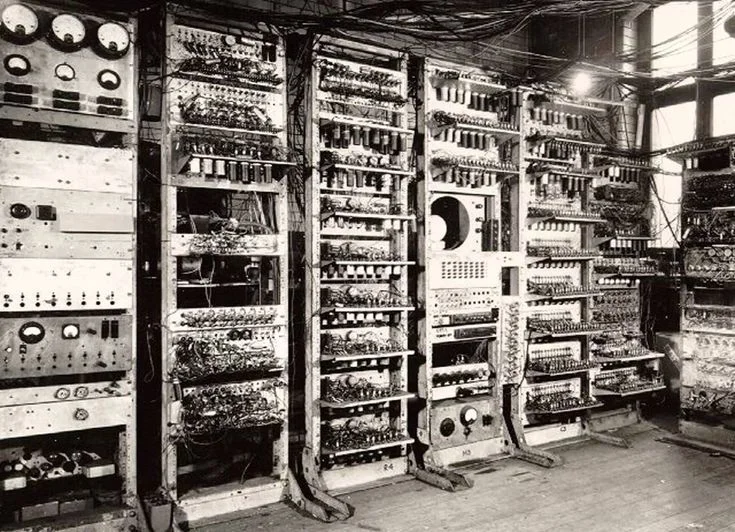

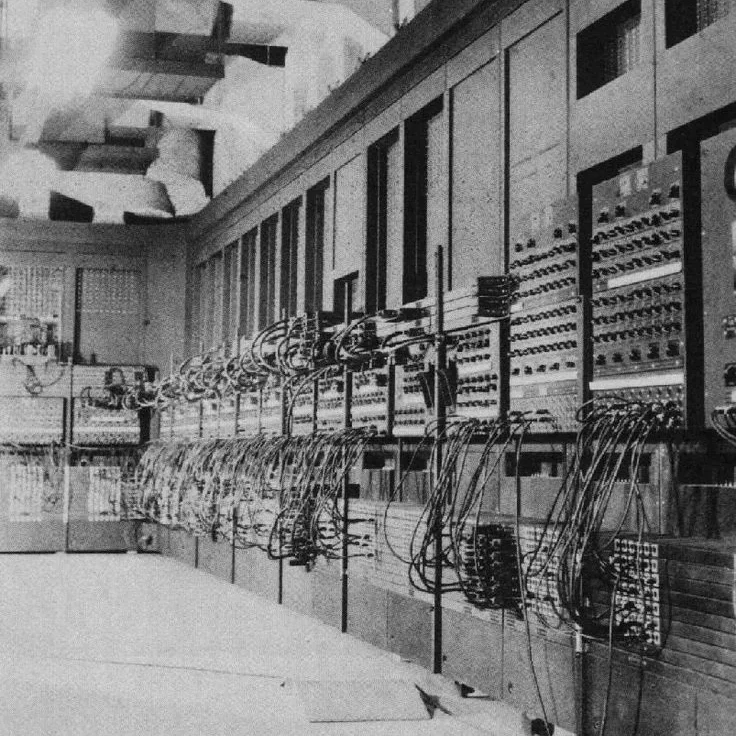

ENIAC (1945)

ENIAC (Electronic Numerical Integrator and Computer) was nicknamed the “giant brain.” It occupied about 1,800 square feet, used roughly 18,000 vacuum tubes, and could perform 5,000 additions per second, a remarkable speed at the time. It is widely considered the first general-purpose programmable electronic digital computer, and its main purpose was to calculate artillery firing tables.

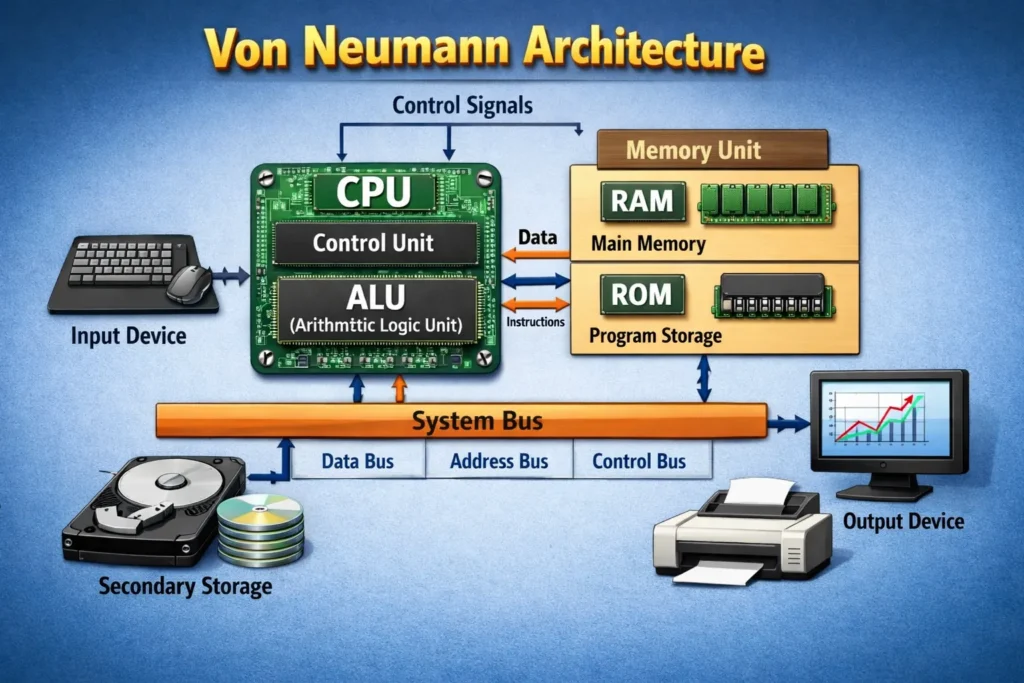

Architectural Evolution: The Von Neumann Model

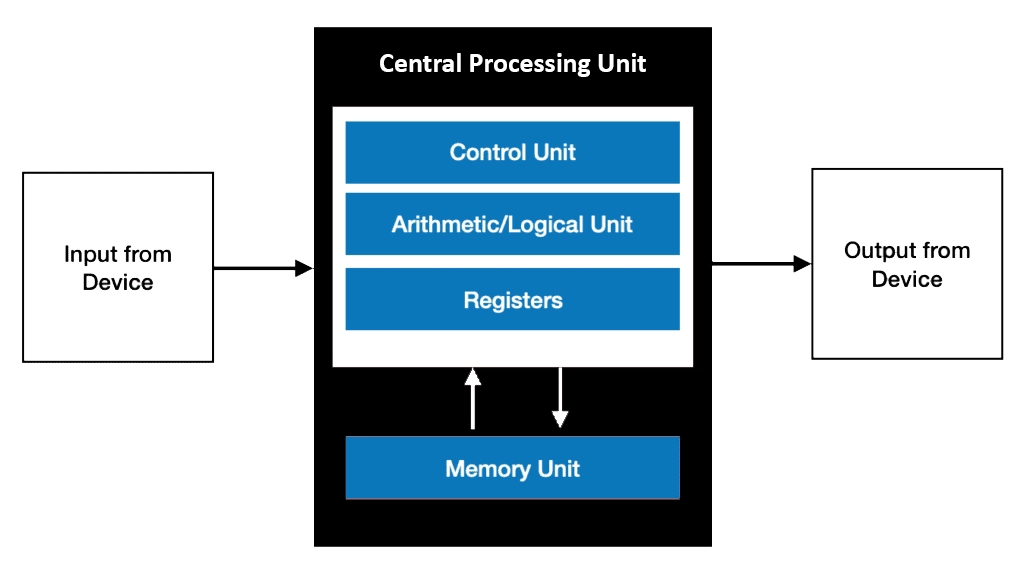

In 1945, John von Neumann described an architecture that nearly every computer still follows today.

The Stored-Program Concept: Previously, computers had to be physically rewired whenever the task changed. Von Neumann proposed storing both instructions and data in the same memory, so that changing the task meant loading a new program instead of rewiring the machine.

The Elements

He identified three essential parts:

CPU (Central Processing Unit): the “brain,” which contains the ALU and the control unit.

Memory: holds the running programs and their data.

I/O (Input/Output): communicates with the outside world.
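To illustrate the stored-program idea, here is a toy machine in Python whose instructions and data live in the same memory list. The CPU is reduced to an accumulator and a program counter running a fetch-decode-execute loop, and `print` stands in for output. The instruction set (LOAD, ADD, STORE, HALT) is hypothetical and far simpler than any real machine's.

```python
def run(memory: list) -> list:
    """Fetch-decode-execute loop of a toy stored-program machine.

    Instructions and data share one memory list. Each instruction is a tuple
    (opcode, address); the opcodes are hypothetical, for illustration only.
    Returns the memory after the program halts.
    """
    acc = 0  # accumulator register inside the CPU
    pc = 0   # program counter: address of the next instruction
    while True:
        opcode, address = memory[pc]  # fetch the instruction from memory
        pc += 1
        if opcode == "LOAD":          # decode and execute it
            acc = memory[address]
        elif opcode == "ADD":
            acc += memory[address]
        elif opcode == "STORE":
            memory[address] = acc
        elif opcode == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {opcode!r}")


program = [
    ("LOAD", 4),   # address 0: acc = memory[4]
    ("ADD", 5),    # address 1: acc = acc + memory[5]
    ("STORE", 6),  # address 2: memory[6] = acc
    ("HALT", 0),   # address 3: stop
    20,            # address 4: data
    22,            # address 5: data
    0,             # address 6: the result is stored here
]
print(run(program)[6])  # 42
```

Because the program is just data in memory, giving the machine a new task means loading a different list, not rewiring anything.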

Second Generation: Transistors

The invention of the transistor at Bell Labs in 1947 changed computing for good. Transistors replaced vacuum tubes, making computers smaller, faster, cheaper, and more energy-efficient.

Key Change: Computers became reliable and affordable enough for widespread business use, although most input and output still took the form of punched cards and printouts; keyboards and screens connected to an operating system became common in the following generation.

Programming Languages

This period also saw a move from binary machine language to symbolic assembly languages and to high-level languages such as FORTRAN and COBOL.

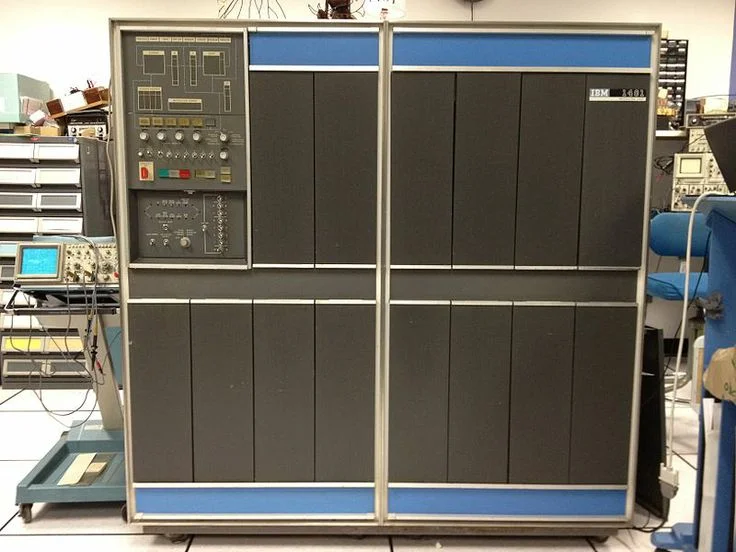

IBM 1401

Widely used by businesses, the IBM 1401 helped many organizations move to digital records. It was also often paired with larger IBM machines such as the 7090, handling tasks like card-to-tape conversion and printing.

The Transistor & Mainframe Era (1950s–1960s)

First Generation: Vacuum Tubes

First-generation computers were built from vacuum tubes, which were fragile, hot, and power-hungry. The transistor, invented at Bell Labs in 1947, replaced them with small, reliable switches.

UNIVAC I (1951)

The UNIVAC I (Universal Automatic Computer) was one of the first commercially produced computers. Its first customer was the U.S. Census Bureau, and it famously predicted the outcome of the 1952 U.S. presidential election on live television.

The Software Revolution: From Machine to Human

Early computers were hard to communicate with; advances in software made them accessible.

First Compiler (1952): Grace Hopper, sometimes called the “Mother of Computing,” produced the first compiler (the A-0 system), a program that translates human-readable instructions into machine code. Her work led to COBOL, one of the first programming languages designed for business.

Operating Systems: Early computers ran one job at a time. Time-sharing systems such as Multics, and later Unix (created by Ken Thompson and Dennis Ritchie), let computers handle many tasks and many users at once.
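Time-sharing works by giving each job a short slice of processor time in turn, fast enough that every user seems to have the machine to themselves. The Python sketch below simulates round-robin scheduling, one common time-sharing policy. The jobs are hypothetical generators, and unlike a real operating system, which preempts jobs with timer interrupts, each job here simply yields control after every step.

```python
from collections import deque


def job(name: str, steps: int):
    """A hypothetical job that needs `steps` units of processor time."""
    for i in range(1, steps + 1):
        yield f"{name} step {i}"


def round_robin(jobs, time_slice: int = 1) -> list[str]:
    """Share one processor among jobs, giving each `time_slice` steps per turn."""
    ready = deque(jobs)
    trace = []
    while ready:
        current = ready.popleft()
        for _ in range(time_slice):
            try:
                trace.append(next(current))
            except StopIteration:
                break  # job finished: do not put it back in the queue
        else:
            ready.append(current)  # slice used up: back of the queue
    return trace


for line in round_robin([job("A", 2), job("B", 3)]):
    print(line)
```

The output interleaves the two jobs' steps, which is exactly what lets several users share one machine.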

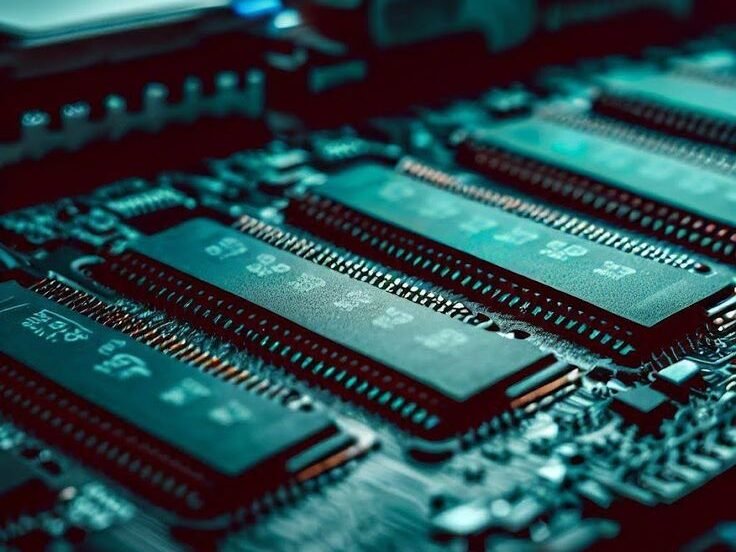

Integrated Circuits (1958)

Jack Kilby (Texas Instruments) and Robert Noyce (Fairchild Semiconductor) independently worked out how to put multiple transistors and other components onto a single chip of semiconductor material.

The Shrinking Era (1960-65)

IBM System/360 (1964)

Often called the most important mainframe in history, the System/360 was a family of compatible computers: companies could upgrade to a larger model without rewriting their software, a concept called compatibility.

Packet Switching (1960s)

Leonard Kleinrock and others developed a method of dividing data into small “packets” so that it could be sent efficiently across a network.
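A minimal Python sketch of the packet idea: split a message into small numbered packets, let them arrive in any order, and reassemble them by sequence number. The `Packet` class and helper names are hypothetical; real protocols also add addressing, error checking, and retransmission of lost packets.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    seq: int        # position of this chunk in the original message
    payload: bytes  # the chunk of data itself


def packetize(message: bytes, size: int) -> list[Packet]:
    """Split a message into packets of at most `size` bytes, numbered in order."""
    return [Packet(seq, message[offset:offset + size])
            for seq, offset in enumerate(range(0, len(message), size))]


def reassemble(packets) -> bytes:
    """Rebuild the message by sorting packets on their sequence numbers."""
    return b"".join(p.payload for p in sorted(packets, key=lambda p: p.seq))


packets = packetize(b"hello, network", size=4)
random.shuffle(packets)     # packets may travel different routes and arrive out of order
print(reassemble(packets))  # b'hello, network'
```

Because each packet carries its own sequence number, no single dedicated line has to stay open for the whole message, which is what made the approach efficient.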

ARPANET (1969)

ARPANET was an early packet-switching network and the foundation of today's Internet. The first message sent over it traveled from UCLA to the Stanford Research Institute (SRI).

Third Generation: Integrated Circuits (1964–1971)

Third-generation computers (roughly 1964–1971) were built with integrated circuits (ICs), which packed many transistors onto a single chip instead of wiring individual transistors together. The result was compact, fast, reliable, and affordable systems that used keyboards and monitors, ran time-sharing and multiprogramming operating systems, and supported high-level languages such as COBOL and FORTRAN. The IBM System/360 is the best-known computer of this generation.

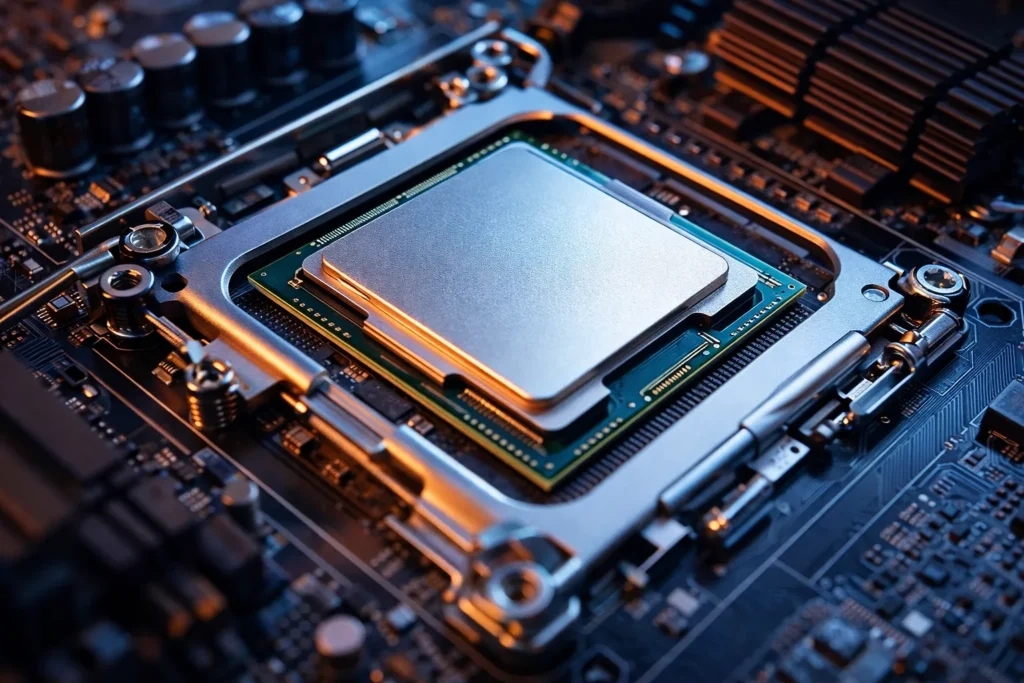

The Microprocessor & PC Revolution (1970s–1980s)

In 1971, Intel introduced the 4004 microprocessor, which placed an entire CPU on a single small piece of silicon. Microprocessors made it possible to build affordable “personal computers.”

C Programming Language (1972)

Dennis Ritchie developed C at Bell Labs, and it became the “mother tongue” of systems software. The kernels of major operating systems, including Windows, macOS, and Linux, are written largely in C.

Altair 8800 (1975)

Often called the first “home computer,” the Altair 8800 was sold as a kit. It had no screen or keyboard, only switches and lights, but it inspired Bill Gates and Paul Allen to write Altair BASIC, which launched Microsoft.

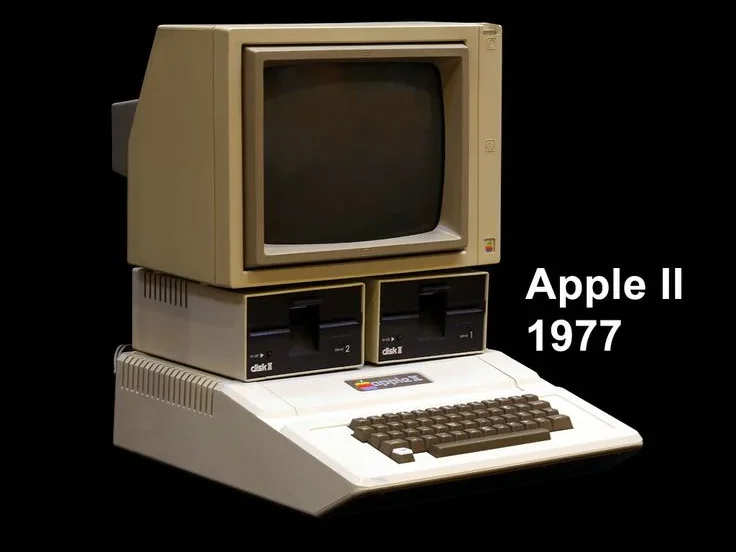

Apple II (1977)

Steve Jobs and Steve Wozniak introduced one of the first commercially successful pre-assembled personal computers, which could display color graphics.

The IBM PC (1981)

IBM's entry into the personal computer market standardized the “PC” architecture and made MS-DOS the dominant operating system.

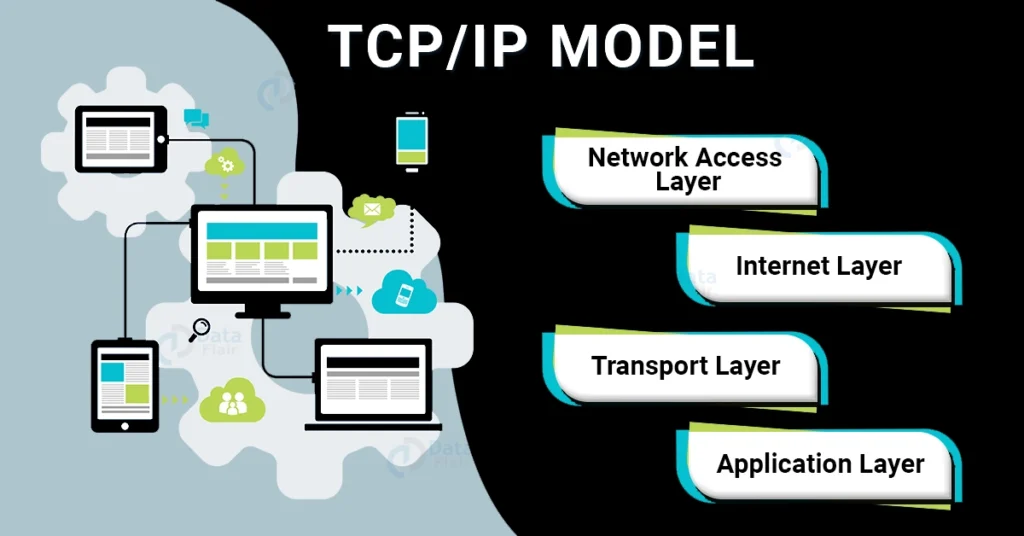

TCP/IP (1983)

Vint Cerf and Bob Kahn developed TCP/IP, the “language” of the Internet. On January 1, 1983, ARPANET switched over to TCP/IP, a date often cited as the birth of the Internet.

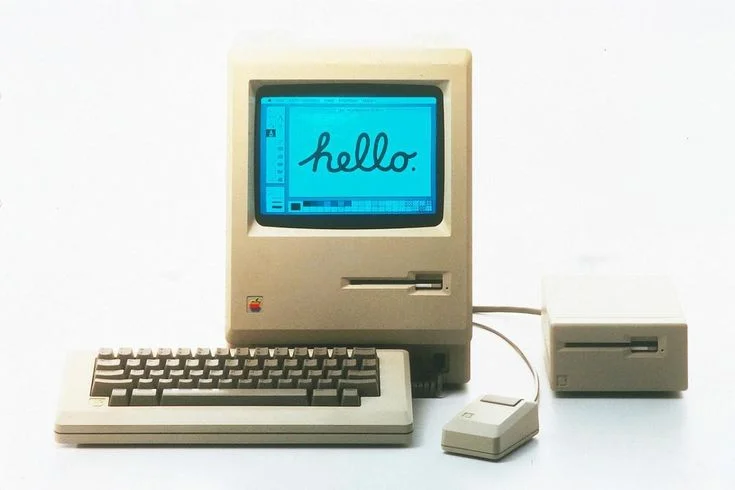

Macintosh Computer (1984)

Apple introduced the first commercially successful computer to use a graphical user interface (GUI) and a mouse, replacing typed text commands.

The World Wide Web (1989)

While working at CERN, Tim Berners-Lee developed HTML, HTTP, and URLs. Together they turned the technical Internet into a visual “Web” that anyone could use.
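To show how a URL and HTTP fit together, this Python sketch splits a URL with the standard library's `urllib.parse.urlsplit` and builds the text of the request a browser would send for it (nothing is sent over the network). It uses the later HTTP/1.1 format; the earliest version of HTTP was simpler still. The function name is hypothetical.

```python
from urllib.parse import urlsplit


def http_get_request(url: str) -> str:
    """Build the text of a minimal HTTP/1.1 GET request for `url` (nothing is sent)."""
    parts = urlsplit(url)
    target = parts.path or "/"  # an empty path means the site's root
    if parts.query:
        target += "?" + parts.query
    return (f"GET {target} HTTP/1.1\r\n"
            f"Host: {parts.netloc}\r\n"
            "Connection: close\r\n"
            "\r\n")  # a blank line ends the request headers


print(http_get_request("http://example.com/index.html"))
```

The URL supplies the server (`example.com`) and the path (`/index.html`); HTTP defines how to ask that server for the path; and the HTML page sent back is what the browser displays.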

Fourth Generation: Microprocessors (1970s)

The fourth generation is defined by the microprocessor: thousands, and later billions, of transistors packed onto a single small silicon chip.

Intel 4004: Released by Intel in 1971, it is generally considered the first commercial microprocessor, combining all the parts of a CPU on a single chip.

The PC Revolution: These technologies led to the personal computer. IBM released its first PC in 1981, and Apple released the Macintosh in 1984.

The Internet and GUI: The spread of graphical user interfaces (GUIs) and the arrival of the World Wide Web in the 1990s turned the computer into something nearly every household wanted.

The Connected Age (1990-2020)

Operating Systems

The 1990s opened with personal computers growing faster and more powerful as Intel moved from the 80386 microprocessor to the i486, and RISC (Reduced Instruction Set Computer) processors became more widespread. The computer went from a device on your desk to a gateway to the world: from 1993, the Mosaic browser gave the Web a graphical interface.

Microsoft launched Windows 3.0 in May 1990. Its user-friendly graphical interface and improved performance solidified Windows as the operating system for PC-compatible computers. Apple continued to develop Mac OS, and Linux, first released in 1991, emerged as an alternative to both.

In 2001, Apple released Mac OS X, built on a robust Unix-based foundation. The same year, Microsoft released Windows XP, which proved a huge success and stayed in use for many years. The growth of the World Wide Web changed how people interacted and paved the way for online platforms such as Facebook and YouTube in the mid-2000s.

Hardware

Laptops became truly portable, evolving from “luggable” computers into lighter, faster machines such as IBM's ThinkPad 700C in 1992. The first PowerPC chips, developed jointly by IBM, Motorola, and Apple, appeared in 1993.

Portability

Personal Digital Assistants (PDAs) such as the Palm Pilot, launched in 1997, introduced the idea of carrying computing power in your pocket.

Contribution of Wi-Fi technology

From 1999, Wi-Fi began to spread, letting people connect to the Internet without cables. LCD monitors replaced bulky cathode-ray tube (CRT) displays, and flash memory capacity grew.

Mobile Shift

Computing then shifted decisively toward smaller devices. The late 2000s brought the smartphone revolution, led by Apple's iOS and Google's Android, which changed how people interact with information and computers in daily life. The iPhone effectively put computing power comparable to a 1990s-era supercomputer in everyone's pocket.

The 2010s: Artificial Intelligence and 5G

The years leading up to 2020 brought steady advances in hardware and the emergence of new frontiers in computing.

Miniaturization

Advances in semiconductor manufacturing produced ever-smaller transistors and more powerful chips, putting processing power once found only in mainframes into consumer products.

New Technologies

Interest grew rapidly in new technologies such as artificial intelligence (AI).

Hardware Milestones

In 2020, Apple made a radical change by launching the M1, a powerful ARM-based system on a chip (SoC) for its MacBook and Mac mini computers, beginning its move away from Intel processors in these products.

Connectivity

The rollout of 5G networks in 2019 and 2020 brought faster data transfer rates, opening the way for more demanding applications and smarter connections between devices.

Cloud Computing (2006 onward)

Amazon Web Services (AWS) launched its cloud services in 2006, letting users rent enormous computing power over the Internet instead of relying on their own hardware.

The Intelligent Era (2020s–Future)

We’re currently in the middle of the fastest-moving change in computer history.

Generative AI (2022-present)

Large language models (LLMs) such as GPT and Gemini have begun to shift the computer's role from “processor” toward “reasoner.”

Quantum Supremacy

Quantum computers have begun to solve certain narrowly defined problems with qubits far faster than classical machines. In 2019, for example, Google reported that its Sycamore processor completed in about 200 seconds a task it estimated would take a leading supercomputer 10,000 years, although other researchers have since disputed that estimate.

Fifth Generation: AI and Quantum (Present & Beyond)

The fifth generation of computers focuses on the following areas:

- Parallel processing

- Artificial intelligence

Machine Learning: Systems that improve with experience without being explicitly programmed for each task.

Quantum Computing: An emerging field that uses the rules of quantum mechanics to perform calculations that are impractical for classical computers.

Cloud Computing: A shift of computing capability from personal hardware to vast networks of servers.

That is the history of computing, from the first ideas of mechanical computation in the 1800s to the microchip world we live in now.